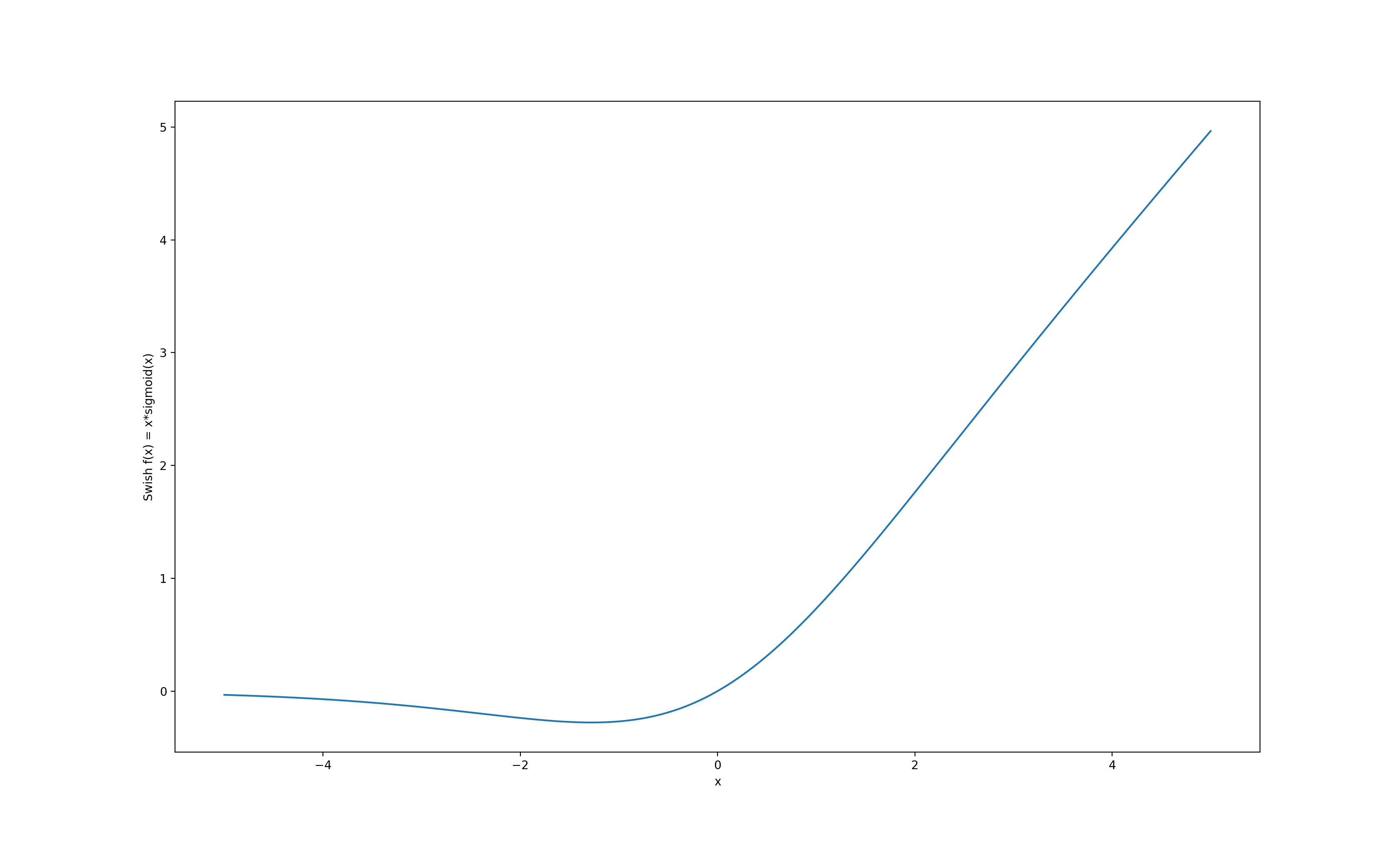

The graphs of the activation functions and their derivatives are plotted as illustrations over inputs increasing monotonically from -5 to 5. Furthermore, we explore the mathematical formulation of Mish in relation to the Swish family of functions and propose an intuitive understanding of how the first-derivative behavior may act as a regularizer that helps the optimization of deep neural networks. For instance, the GELU (Gaussian error linear unit) activation function is $x\Phi(x)$ (Hendrycks and Gimpel, 2016), where $\Phi(x)$ is the standard Gaussian cumulative distribution function. As can be seen, the two derivatives are very similar; the only change is the β parameter in place of the 1.

CUDA MISH is only 20% slower than ReLU, so I guess it's okay as it is, but perhaps it would be cheaper to use ReLU when running inference on a CPU or a weaker GPU. We could also try fine-tuning to prune a model down to use fewer neurons.

APTx Activation Function: The paper shows the positive-part approximation of MISH at α = 1, β = 1 and γ = 1/2, as at that time we were focused on the positive gradients only. The paper also mentions that even more overlap between the MISH and APTx derivatives can be generated by varying the α, β and γ parameters. One can vary α, β and γ to generate the complete mapping for MISH, i.e. both the positive and the negative part, and other mappings of APTx can be used for the positive and negative parts separately. I think it might work for the values α = 1, β = 1/2 and γ = 1/1.9 or γ = 1/1.95.

The advantages/objectives of creating the APTx activation function were as follows. The function and derivative of APTx require less computation than the expressions used for SWISH and MISH, so using the APTx expression will speed up the computation. We can also convert sech² to tanh form, using sech²(u) = 1 − tanh²(u), to further reduce the computation required for the derivatives during backward propagation.
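To make the APTx parameters above concrete, here is a minimal sketch in plain Python. It assumes the definition APTx(x) = (α + tanh(βx)) · γx from the APTx paper, which is not spelled out in this thread; the function names `aptx`, `aptx_grad` and `mish` are my own. The derivative uses the sech²-to-tanh rewrite, so tanh is evaluated only once per input.

```python
import math

def aptx(x, alpha=1.0, beta=1.0, gamma=0.5):
    # Assumed definition from the APTx paper: (alpha + tanh(beta*x)) * gamma * x
    return (alpha + math.tanh(beta * x)) * gamma * x

def aptx_grad(x, alpha=1.0, beta=1.0, gamma=0.5):
    # d/dx = gamma*(alpha + tanh(beta*x)) + gamma*beta*x*sech^2(beta*x),
    # with sech^2(u) replaced by 1 - tanh(u)^2 so tanh is computed once.
    t = math.tanh(beta * x)
    return gamma * (alpha + t) + gamma * beta * x * (1.0 - t * t)

def mish(x):
    # Mish(x) = x * tanh(softplus(x)); softplus written in an overflow-safe form.
    softplus = max(x, 0.0) + math.log1p(math.exp(-abs(x)))
    return x * math.tanh(softplus)

# Print both functions side by side at a few sample inputs.
for x in (-3.0, -1.0, 0.5, 1.0, 3.0):
    print(f"x={x:+.1f}  aptx={aptx(x):+.4f}  mish={mish(x):+.4f}")
```

Passing other values, for example `aptx(x, 1.0, 0.5, 1/1.9)`, lets you try the alternative settings suggested above and compare them against `mish` yourself.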
The derivative of Swish, $f(x) = x\,\sigma(\beta x)$, is $f'(x) = \beta f(x) + \sigma(\beta x)\,(1 - \beta f(x))$, where $\sigma(x) = \mathrm{sigmoid}(x) = 1/(1 + \exp(-x))$.

I was thinking that SWISH should be more efficient to compute than MISH, and I found this piecewise function based on SWISH which follows MISH closely: x * sigmoid(x) for x <= 0 (SWISH with β = 1), and x * sigmoid(2x) for x >= 0 (SWISH with β = 2).

I noticed this after reading the unpublished APTx paper. Their proposed APTx function to approximate MISH turns out to be just SWISH with β = 2. It's not a distinct function, and it's not very close to MISH for negative x, but it was thought-provoking. CUDA MISH or hard MISH would likely be a better option.

I also had an idea that it would be possible to change the activation function of a model after training, by running some epochs while gradually lerping from the old activation function to the new one. This might make it possible to do the bulk of training with an expensive activation function but perform inference with a cheaper function such as ReLU or hard-mish. I don't know if such fine-tuning to change activation functions has been tried.
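The ideas in this comment can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the APTx definition (α + tanh(βx)) · γx is taken from the APTx paper, the helper names (`piecewise_swish`, `lerp_activation`) are made up for this example, and the linear ramp of `t` over epochs is a hypothetical schedule, not something that has been tested in training.

```python
import math

def sigmoid(x):
    # Logistic function, written to avoid overflow for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def swish(x, beta=1.0):
    return x * sigmoid(beta * x)

def swish_grad(x, beta=1.0):
    # f'(x) = beta*f(x) + sigmoid(beta*x) * (1 - beta*f(x))
    f = swish(x, beta)
    return beta * f + sigmoid(beta * x) * (1.0 - beta * f)

def piecewise_swish(x):
    # SWISH with beta = 1 for x <= 0, SWISH with beta = 2 for x > 0.
    return swish(x, 1.0) if x <= 0 else swish(x, 2.0)

def aptx(x, alpha=1.0, beta=1.0, gamma=0.5):
    # Assumed APTx definition; with the defaults it equals swish(x, 2.0).
    return (alpha + math.tanh(beta * x)) * gamma * x

def mish(x):
    softplus = max(x, 0.0) + math.log1p(math.exp(-abs(x)))
    return x * math.tanh(softplus)

def relu(x):
    return max(x, 0.0)

def lerp_activation(old, new, t):
    # Blend two activations: t = 0 gives `old`, t = 1 gives `new`.
    def blended(x):
        return (1.0 - t) * old(x) + t * new(x)
    return blended

# Hypothetical schedule: ramp t linearly from 0 to 1 across fine-tuning epochs.
epochs = 5
for epoch in range(epochs):
    t = epoch / (epochs - 1)
    act = lerp_activation(mish, relu, t)
    print(f"epoch={epoch}  t={t:.2f}  act(-1.0)={act(-1.0):+.4f}")
```

In a real model the blended activation would replace the original one inside the network while fine-tuning continues; whether the weights adapt well enough for this to work is exactly the open question raised above.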
